A Multi-Vendor Marketplace Architecture on AWS Cloud Services

I am starting a series of blog posts on how I built a multi-vendor marketplace for stock SVG images on AWS Cloud services. In the series I will cover the architecture and tech stack, dive deep into specific problems and solutions, and share some of what I have learned. A big part of the challenge has been ensuring reliability, scalability, and performance on a limited budget.

The Tech Stack

We started off hosting the Next.js front-end on Vercel, which makes hosting Next.js apps a breeze, but the cost was more than we could absorb at this stage. The price is totally reasonable, just outside of our budget. We opted to host on AWS Lightsail instead. We liked it for the low cost and because it makes it easy to migrate to a more powerful instance, or even to EC2, if we need to.

We Dockerized the front-end and set up a custom GitHub CI/CD workflow. When we commit to our develop or production branch, the appropriate workflow is triggered and the code is deployed to the Lightsail instance. We rotate three Docker images, so if a deployment fails, we can roll back to the last good image. When a new build is deployed, the oldest image is removed, and the new image is tagged as latest.
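The three-image rotation described above can be sketched roughly as follows. This is a minimal illustration, not our actual workflow script: the `ImageRecord` shape and the `rotateImages` and `rollbackTarget` helpers are hypothetical names, and the sketch assumes each image carries a build timestamp.

```typescript
// Hypothetical record for a built image; field names are illustrative only.
interface ImageRecord {
  tag: string;     // e.g. a commit SHA
  builtAt: number; // build time as a Unix timestamp in milliseconds
}

interface RotationResult {
  keep: ImageRecord[];   // newest first; keep[0] is the image tagged "latest"
  remove: ImageRecord[]; // images to delete from the instance
}

// Add a new build and retain only the `maxImages` most recent images.
function rotateImages(
  existing: ImageRecord[],
  incoming: ImageRecord,
  maxImages: number = 3
): RotationResult {
  const all = existing.concat([incoming]).sort((a, b) => b.builtAt - a.builtAt);
  return { keep: all.slice(0, maxImages), remove: all.slice(maxImages) };
}

// On a failed deployment, roll back to the next-newest retained image, if any.
function rollbackTarget(
  keep: ImageRecord[],
  failedTag: string
): ImageRecord | undefined {
  const idx = keep.findIndex((img) => img.tag === failedTag);
  return idx >= 0 ? keep[idx + 1] : undefined;
}
```

Keeping the list sorted newest-first means the rollback target is always the entry right after the failed one, and the image to delete is whatever falls off the end.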

For our dev environment we use Lightsail for both the front-end and the back-end. The production front-end also runs as a Docker container on AWS Lightsail, with the addition of CloudFront CDN + WAF. The production back-end is hosted on AWS EC2 and is also Dockerized to keep all of our environments consistent. We use Nginx as a reverse proxy to route incoming traffic to the appropriate Docker container. Nginx, WAF, and our Load Balancer also let us filter out malicious or harmful traffic by IP address, user agent, and other criteria.

We built the back-end REST API using Express.js + PostgreSQL (hosted on AWS RDS), with Objection.js as the ORM. The API runs behind an AWS Application Load Balancer with a hot/cold standby setup in case of failure. We use Elasticsearch (hosted by Elastic.co) for search, and Logstash (running on an EC2 instance) to ingest product data from the database, where it is exposed as PostgreSQL views.

We use AWS S3 to store the millions of SVG, PNG, and WebP images, as well as the ZIP-archived downloadable products. We use CloudWatch for logging and monitoring, and AWS EventBridge to trigger events in the back-end. We also use AWS SQS for asynchronous processing of images and other tasks, and AWS SNS for notifications.

Image Ingestion Pipeline (Serverless)

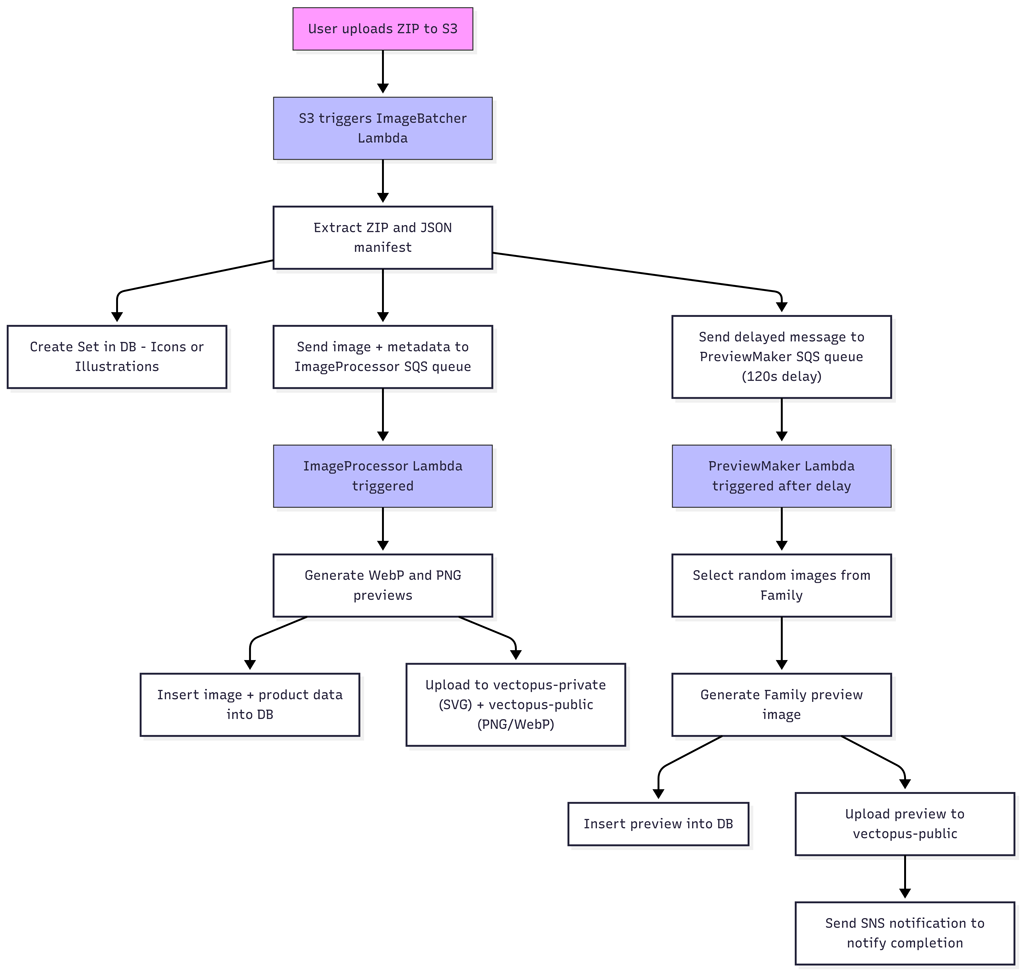

Our product/image ingestion pipeline runs as a serverless application built with AWS EventBridge, Lambda, S3, SQS, and SNS. When contributors upload their icon and illustration sets, the process kicks off asynchronously, processing the images in the background without blocking the user experience.

Images are uploaded to S3 as a ZIP archive with a JSON manifest containing relevant metadata. The S3 bucket triggers a Lambda function (ImageBatcher) that extracts the archive, creates a new product (Icon or Illustration Set) in the database, and sends each individual image + metadata to an SQS queue (ImageProcessor queue). A second, delayed message is sent to another SQS queue (PreviewMaker queue). I'll explain this second queue in a moment.
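To make the fan-out concrete, here is a minimal sketch of how the ImageBatcher might build its outgoing messages. The `Manifest` shape, the `buildMessages` helper, and the queue URLs are assumptions for illustration; the real manifest fields are not shown in this post. The message objects mirror the `QueueUrl`, `MessageBody`, and `DelaySeconds` fields of an SQS SendMessage request, and the 120-second value matches the PreviewMaker delay used in this pipeline.

```typescript
// Hypothetical manifest shape; the actual fields may differ.
interface Manifest {
  setName: string;
  familyId: string;
  images: { file: string; title: string; tags: string[] }[];
}

// Mirrors the fields of an SQS SendMessage request.
interface QueueMessage {
  QueueUrl: string;
  MessageBody: string;
  DelaySeconds?: number;
}

// Delay before the PreviewMaker message becomes visible to its consumer.
const PREVIEW_DELAY_SECONDS = 120;

// Build one message per image, plus a single delayed message for the previews.
function buildMessages(
  manifest: Manifest,
  productId: string,
  processorQueueUrl: string,
  previewQueueUrl: string
): QueueMessage[] {
  const messages: QueueMessage[] = manifest.images.map((image) => ({
    QueueUrl: processorQueueUrl,
    MessageBody: JSON.stringify({
      productId,
      file: image.file,
      title: image.title,
      tags: image.tags,
    }),
  }));
  messages.push({
    QueueUrl: previewQueueUrl,
    MessageBody: JSON.stringify({ productId, familyId: manifest.familyId }),
    DelaySeconds: PREVIEW_DELAY_SECONDS,
  });
  return messages;
}
```

In a real Lambda, each of these objects would be handed to the SQS client; keeping the message construction in a pure function makes it easy to unit test without touching AWS.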

The ImageProcessor queue triggers a second Lambda (ImageProcessor) that creates multiple image previews in various sizes and formats (WebP and PNG), then inserts the individual images and icon or illustration product data into the database.
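As a rough illustration of the "various sizes and formats" step, the sketch below plans the set of preview files for one source image. The widths, the `previews/` key prefix, and the `planPreviews` helper are all assumptions made for this example; only the WebP and PNG formats come from the pipeline itself, and the actual rasterizing is left out.

```typescript
type PreviewFormat = "webp" | "png";

interface PreviewVariant {
  width: number;         // target width in pixels
  format: PreviewFormat; // output format
  key: string;           // hypothetical S3 object key for the preview
}

// Assumed sizes for illustration; the real pipeline's sizes are configurable.
const PREVIEW_WIDTHS: number[] = [128, 256, 512];
const PREVIEW_FORMATS: PreviewFormat[] = ["webp", "png"];

// List every size/format combination to render for one source SVG.
function planPreviews(
  sourceKey: string,
  widths: number[] = PREVIEW_WIDTHS,
  formats: PreviewFormat[] = PREVIEW_FORMATS
): PreviewVariant[] {
  const base = sourceKey.replace(/\.svg$/i, "");
  const variants: PreviewVariant[] = [];
  for (const width of widths) {
    for (const format of formats) {
      variants.push({ width, format, key: `previews/${base}_${width}.${format}` });
    }
  }
  return variants;
}
```

Planning the variants up front keeps the rendering loop simple: each entry describes exactly one output file to generate and upload.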

The PreviewMaker queue triggers the PreviewMaker Lambda after a 120-second delay to give the individual images time to finish processing. The PreviewMaker creates updated preview images for the Family that the new icons and illustrations belong to. It does this by randomly selecting a configurable number of images from all of the icons and illustrations across all of the Sets in that product Family. The entire process, minus the 2-minute delay, takes under a minute. Typically, by the time the user finishes creating the new product in the Contributor UI, the images are already processed and ready to display.
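The random selection step could look something like the sketch below. It uses a partial Fisher-Yates shuffle, which is one common way to pick a fixed number of distinct items and is not necessarily what the PreviewMaker does internally. The `sampleImages` name and the injectable `random` parameter are assumptions added for illustration and testability.

```typescript
// Pick `count` distinct items uniformly at random using a partial
// Fisher-Yates shuffle. `random` must return a number in [0, 1).
function sampleImages<T>(
  items: readonly T[],
  count: number,
  random: () => number = Math.random
): T[] {
  const pool = items.slice(); // copy so the caller's array is not mutated
  const n = Math.min(Math.max(count, 0), pool.length);
  for (let i = 0; i < n; i++) {
    // Choose an index from the not-yet-selected tail and swap it into place.
    const j = i + Math.floor(random() * (pool.length - i));
    const tmp = pool[i] as T;
    pool[i] = pool[j] as T;
    pool[j] = tmp;
  }
  return pool.slice(0, n);
}
```

Only the first `count` positions are shuffled, so the cost scales with the number of images selected for the preview rather than with the size of the whole Family.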

Up Next: Using Go worker threads to process millions of images

In the next post I will take a deep dive into how we benchmarked Node, Python, and Go to process millions of images. We ultimately built a threaded CLI batch processor in Go that turned a multi-day job into one that took less than an hour. It’s a good example of how taking a step back, challenging assumptions, and doing the math can ultimately save a lot of time.